Underrated Quick Fix smart actions

While AI chats and agents are all the rage, I think GitHub Copilot’s smart actions in VS Code deserve more attention. Smart actions are little convenience tools sprinkled in various places in the IDE that invoke AI tasks directly.

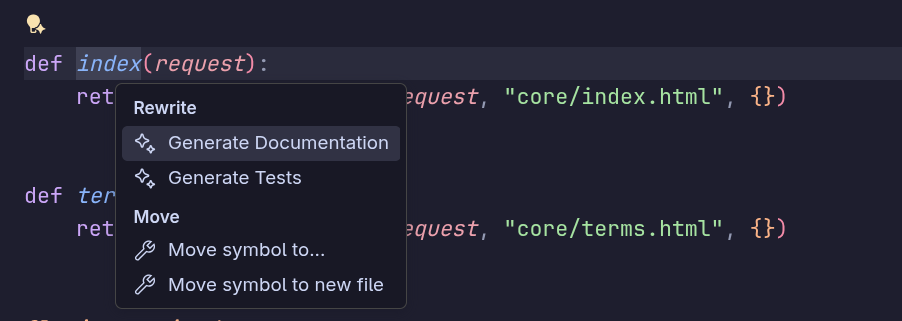

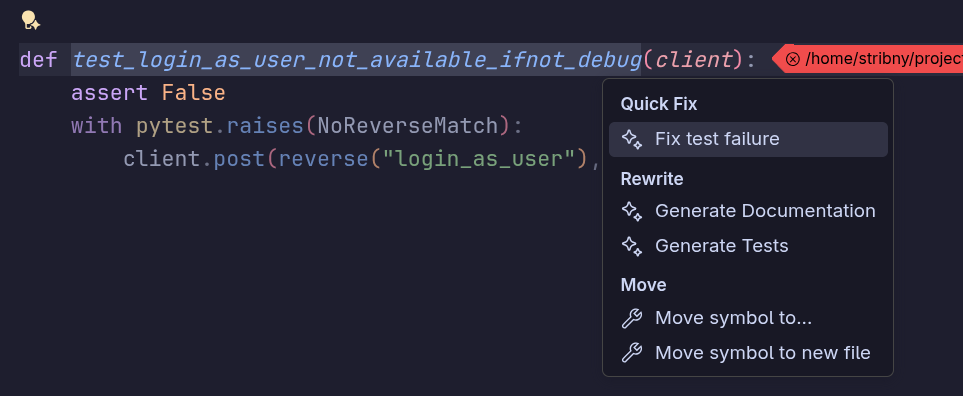

A subset of the smart actions is integrated into the Visual Studio Code Quick Fix menu, which is the editor’s entry point for refactoring tasks (like Extract method, Move symbol, …) based on the cursor position in the code. The quickest way to open it is with a keyboard shortcut (by default Ctrl + ., or Cmd + . on macOS) or by clicking the lightbulb icon 💡.

Smart actions are helpful whenever we aim for AI-assisted development where writing code manually blends with targeted use of AI tools.

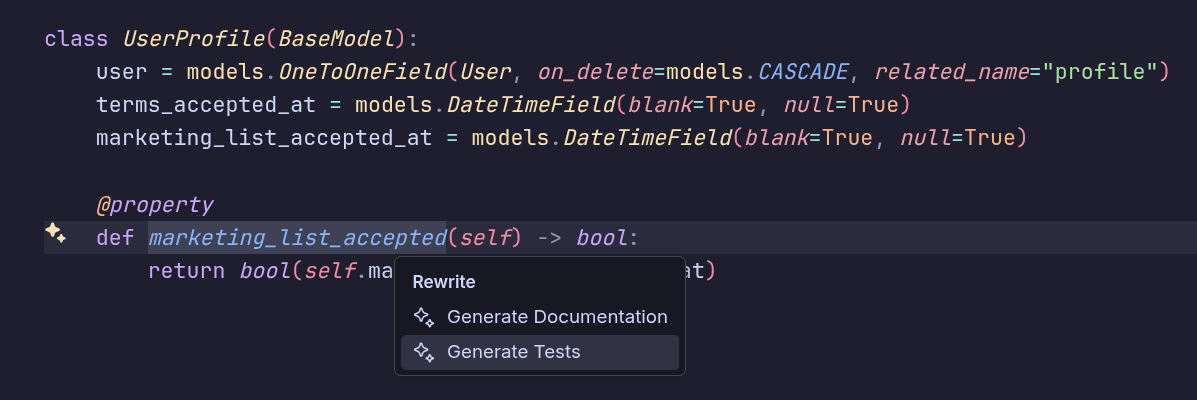

Depending on the context, the Quick Fix menu will typically offer some combination of the following smart actions: Generate, Modify, Review, Generate documentation, Generate tests, Explain, and Fix.

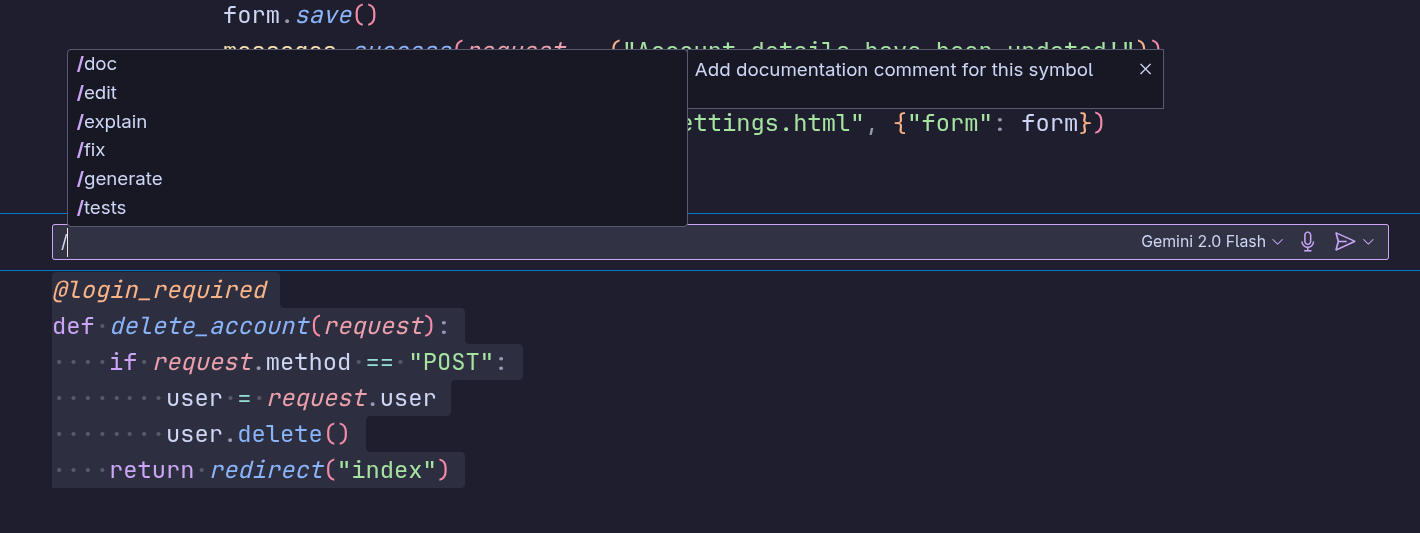

Some actions show a prompt dialog first so you can provide instructions (Generate…), and some show the same dialog after the action is done so you can follow up with further prompts. In both cases you can choose the model to use, use voice commands, or invoke slash commands when it makes sense.

Generate

The Generate smart action is available on blank lines for generating new code. It works like any other “generate code” approach, but with the advantage of placing the new code exactly where you want it: writing a new method for a class, adding some logic to an existing function, and so on. For smaller blocks of code, the Generate action is faster than using agents.

Modify

The Modify smart action is displayed whenever code is selected, which makes it perfect for in-place modifications and code rewrites. It is like “AI refactoring,” where you can ask for renames (e.g., converting between camelCase and snake_case), rewriting classes to functions and back, converting divs into semantic HTML structure, and so on.
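To make the rename case concrete, here is a hypothetical before/after for a request such as “convert names to snake_case”. The function and parameter names are made up for illustration, and what Copilot actually proposes will vary with the model and the prompt.

```python
# Before: the selected code uses camelCase names (hypothetical example).
def totalPrice(unitPrice, itemCount):
    return unitPrice * itemCount


# After: roughly what a "convert names to snake_case" request might yield.
# Only the names change; the behavior stays the same.
def total_price(unit_price, item_count):
    return unit_price * item_count
```

Because a rename should never change behavior, comparing the old and new versions on a few inputs is a cheap sanity check before accepting the change.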

Review

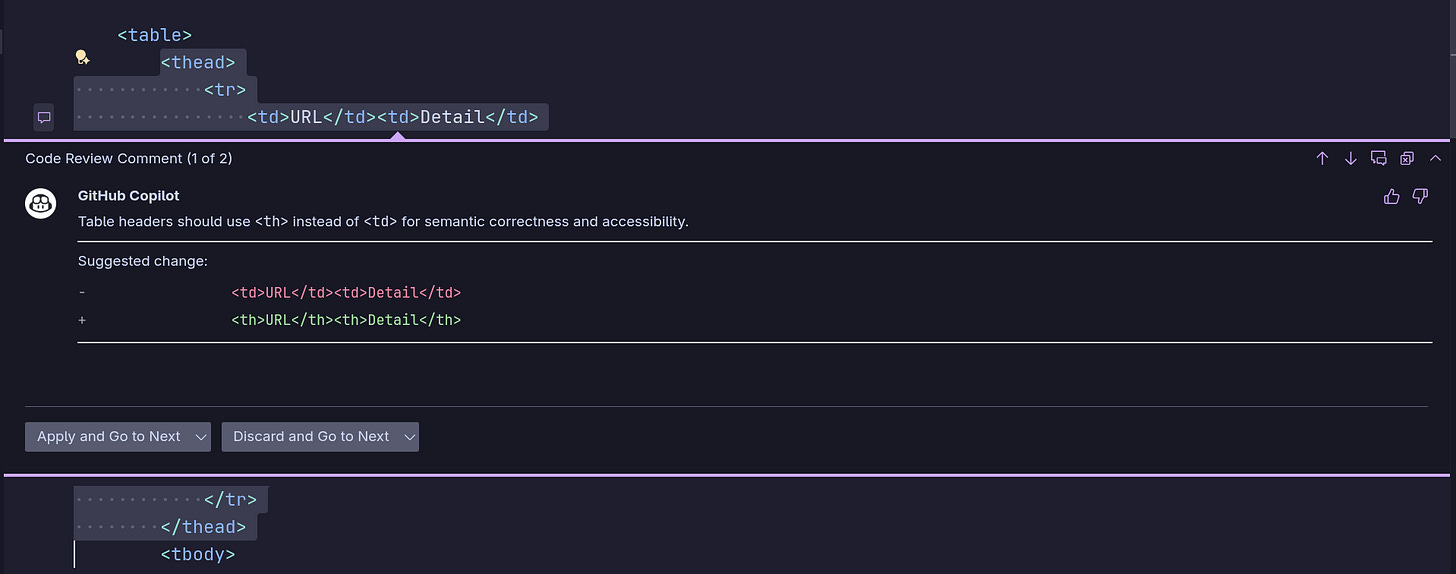

The Review smart action instructs GitHub Copilot to provide a code review of the selected code. The result is not just textual feedback, but a series of proposed enhancements that you can step through, accepting or rejecting each one separately.

One interesting use case for the Review smart action is to run it on previously generated code to see whether the AI-written code itself can be improved.

Generate documentation

The Generate documentation action appears when the cursor is placed on a symbol that is typically documented (think class and function names). You can easily add language-specific descriptions in the form of, e.g., JSDoc for JavaScript or docstrings for Python. It is handy when you don’t remember all the syntax for documenting function parameters and other specifics.
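As an illustration of the kind of result to expect, here is a small Python function with a Google-style docstring. Both the `clamp` function and the docstring are a hand-written sketch, not captured Copilot output, and the docstring style you get may differ.

```python
def clamp(value, lower, upper):
    """Restrict a value to the inclusive range [lower, upper].

    Args:
        value: The number to clamp.
        lower: The smallest allowed result.
        upper: The largest allowed result.

    Returns:
        ``lower`` if ``value`` is below it, ``upper`` if ``value`` is
        above it, otherwise ``value`` unchanged.
    """
    return max(lower, min(value, upper))
```

The parameter and return sections are exactly the parts whose syntax is easy to forget, which is where the action saves the most time.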

Generate tests

The Generate tests action is displayed when the cursor is placed on symbols that are typically unit tested, like method and function names. It creates one or more tests right away, without asking you for a description first. After the test code is generated, you can prompt the model further for modifications.
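For a sense of what generated tests might look like, here is a hand-written sketch using Python’s built-in `unittest` module. The `slugify` function and the test names are hypothetical, and the actual tests Copilot produces depend on your code and test framework.

```python
import unittest


def slugify(title):
    """Turn a title into a lowercase, hyphen-separated slug."""
    return "-".join(title.lower().split())


class TestSlugify(unittest.TestCase):
    def test_replaces_spaces_with_hyphens(self):
        self.assertEqual(slugify("Quick Fix Menu"), "quick-fix-menu")

    def test_collapses_repeated_whitespace(self):
        self.assertEqual(slugify("  smart   actions "), "smart-actions")

    def test_empty_string_stays_empty(self):
        self.assertEqual(slugify(""), "")
```

Saved to a file, tests like these can be run with `python -m unittest`. Generated tests usually cover the obvious cases first, so the follow-up prompt is a good place to ask for edge cases.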

Explain

With the Explain action, you can directly get a short summary of what certain code does or explore problems highlighted in the editor. I find it strange that the Explain action is not offered for every code selection: it only appears when the selected code contains an identified problem or when the cursor is placed directly on problematic code.

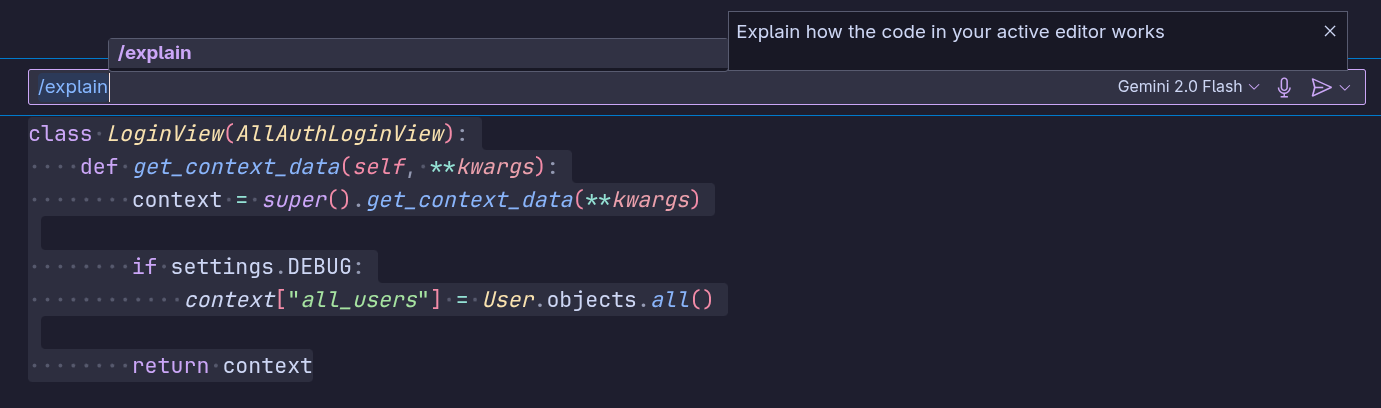

Anyway, you can invoke the Explain action with a small detour even if it is not offered in the Quick Fix menu. Use the slash command /explain in the Modify smart action text area instead.

Fix

The Fix action is similar to the Explain action in that both are available only for code with already identified problems. To invoke it, the editor needs to be aware of the problem first.
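A typical “identified problem” is something a linter or language server already underlines, such as a misspelled variable name. The sketch below is a hypothetical example of such a problem and of the kind of correction a Fix request might propose; the function names are made up for illustration.

```python
# Before: a linter or language server would typically flag `totl`
# as an undefined name (hypothetical example).
def average_before(values):
    total = sum(values)
    return totl / len(values)  # raises NameError when called


# After: the kind of one-line correction a Fix request might propose.
def average_after(values):
    total = sum(values)
    return total / len(values)
```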

There is also the Fix test failure action, which can be invoked from within test code that failed. Again, the editor needs to be aware of the problem, so this action is available only when using the integrated test runner.

I am quite happy with Quick Fix smart actions, as they provide “AI assistance” when writing code manually without the need to resort to more complicated AI tools.

What is your experience?

—Petr